cross-posted from: https://lemmy.dbzer0.com/post/26278528

I’m running my media server on a 36 TB RAID 5 array with 3 disks, so I do have some resilience to drives failing. But currently I can only afford to lose a single drive at a time, which got me thinking about backups. Normally I’d just do a backup to my NAS, but that quickly gets ridiculous with the size of my library, which is significantly larger than my NAS storage of only a few TB. And buying cloud storage is much too expensive for my liking at these amounts of storage.
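For anyone sizing a backup target against an array like this, here is a rough sketch of the usual parity arithmetic. It assumes equal-sized disks and ignores filesystem overhead and the TB/TiB difference; the function name `usable_capacity_tb` and the example disk sizes are made up for illustration, since the post doesn’t say whether 36 TB is the raw or the usable figure.

```python
def usable_capacity_tb(disk_count: int, disk_size_tb: float, parity_disks: int = 1) -> float:
    """Usable space of a parity array: one disk's worth of space per parity disk is lost.

    parity_disks=1 models RAID 5 (survives one failure),
    parity_disks=2 models RAID 6 style dual parity (survives two).
    """
    if disk_count <= parity_disks:
        raise ValueError("need more disks than parity disks")
    return (disk_count - parity_disks) * disk_size_tb


# Hypothetical readings of "36 TB with 3 disks":
print(usable_capacity_tb(3, 18))  # three 18 TB disks: 36 TB usable, 54 TB raw
print(usable_capacity_tb(3, 12))  # three 12 TB disks: 24 TB usable, 36 TB raw
```

Either way, the amount that would need backing up is the usable figure, not the raw one.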

Do you back up only the most valuable parts of your library?

I’m crossposting because some comments were interesting…

I live dangerously. I’ve no backups.

I tip my fedora at you sir

4-bay NAS, 22 TB drives = 88 TB as a single volume. Backed up to offline single storage drives that are timestamped. Anything newer than that timestamp gets rsynced/robocopied to a new drive until it’s full; then update the timestamp, swap the drive, rinse and repeat. If a drive fails, just replace it, copy the contents of the backup drives back to the master, and you’re restored. Alternatively, you can set up a RAID with single-disk fault tolerance.
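The timestamp routine described above could be sketched roughly like this in Python. This is not the commenter’s actual script (they mention rsync/robocopy); the function name `copy_newer_files` and its parameters are assumptions for illustration, and it compares file modification times against a Unix timestamp.

```python
import shutil
from pathlib import Path


def copy_newer_files(source: Path, dest: Path, last_backup: float,
                     reserve_bytes: int = 0) -> list[Path]:
    """Copy files modified after `last_backup` from `source` into `dest`.

    Stops as soon as the next file would not fit on the destination drive
    and returns the relative paths that were copied.
    """
    copied = []
    # Collect everything newer than the last backup, oldest first.
    candidates = sorted(
        (p for p in source.rglob("*")
         if p.is_file() and p.stat().st_mtime > last_backup),
        key=lambda p: p.stat().st_mtime,
    )
    for path in candidates:
        free = shutil.disk_usage(dest).free
        if path.stat().st_size + reserve_bytes > free:
            break  # drive is full: swap in a fresh drive and run again
        target = dest / path.relative_to(source)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)  # copy2 also preserves the modification time
        copied.append(path.relative_to(source))
    return copied
```

After a run, the caller would record a new timestamp before swapping drives. How to pick that timestamp when a drive fills up mid-run (for example, the modification time of the last file copied) is left open here.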

Synology NAS

Edit: plus two SSD backups. I need to re-engage Backblaze for offsite.

an unholy ensemble of 4 external drives and 2 cloud storage providers managed with git annex

capacity: 3 TB + “pay as you go”

available: 1TB

used: 1.01TB

the drives were originally 5, then 1 failed and i added the “pay as you go” s3 provider to pick up the slack

git annex handles redundancy and versioning, i configured it to keep 2 copies of every file across the 4 drives and the s3 provider, then i got 1tb of free onedrive space from my university and i’m in the process of making a full backup of everything in there

not really backup as much as redundant storage, but i think it’s close enough

if anyone wants to roast my setup please do

Used to use rclone encrypted to gsuite, until that gravy train ran out lol.

Now I’ve got 3 Unraid boxes (2 on site, 3rd off) and a metric shitton of drives I’ve accumulated over the years.

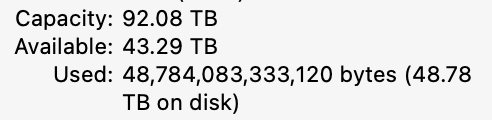

Unraid checking in. 150TB ish. Dual parity for media files on XFS drives. Can lose 2 drives before array data is at risk, and even then if a drive fails, I’d only lose the data on that drive not the entire array.

Important data is on an encrypted ZFS array which is snapshotted and replicated hourly to a separate backup server and replicated one more time weekly to 3.5” archive drives which are swapped out to a safe deposit box every few months.

I used to use rsync to do it but snapshot replication with sanoid/syncoid does the same thing in a tiny fraction of the time by just sending snapshot deltas rather than having to compare each file.
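For readers who haven’t used syncoid, a minimal sketch of what a scheduled replication job might invoke, written as a small Python wrapper. The dataset and host names are hypothetical, and this is not the commenter’s actual configuration; the only syncoid option used is `--recursive`, which replicates child datasets too.

```python
import subprocess


def build_syncoid_command(source_dataset: str, target: str, recursive: bool = True) -> list[str]:
    """Assemble the argv for one syncoid replication run (source, then target)."""
    cmd = ["syncoid"]
    if recursive:
        cmd.append("--recursive")  # include child datasets
    cmd += [source_dataset, target]
    return cmd


def replicate(source_dataset: str, target: str) -> None:
    """Run syncoid; needs ZFS and sanoid/syncoid installed on this machine."""
    subprocess.run(build_syncoid_command(source_dataset, target), check=True)


# Hypothetical names: a local dataset pushed to a pool on a backup host over SSH.
print(build_syncoid_command("tank/important", "backup@backuphost:backuppool/important"))
```

Calling `replicate(...)` from an hourly cron job or systemd timer would roughly match the schedule described above.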