the crypto scam ended when the AI scam started. AI conveniently uses the same or similar hardware that crypto used before the bubble burst.

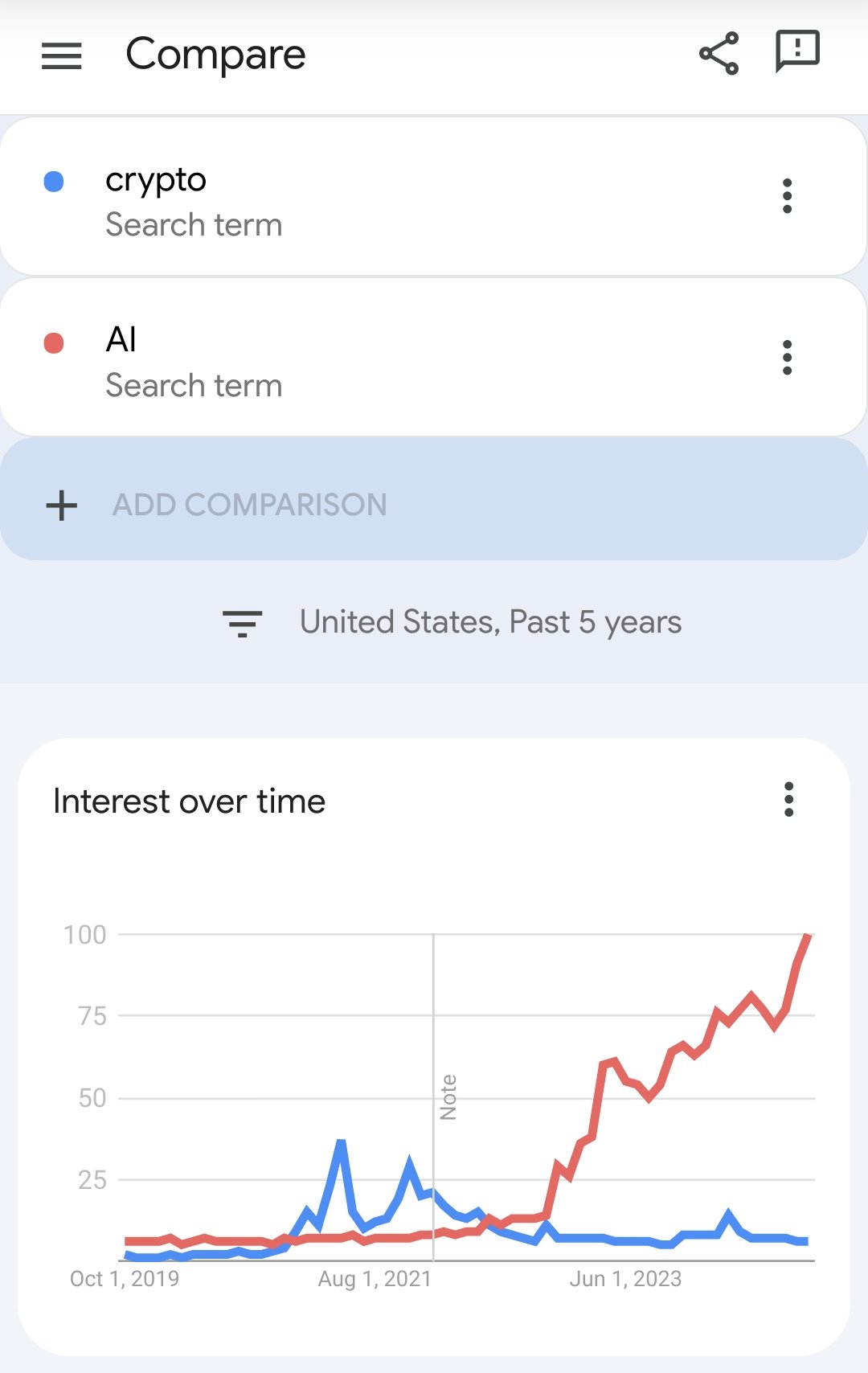

that not enough? take a look at this Google Trends chart, which shows that AI took off right when interest in crypto died.

so yeah, there’s a lot more that connects the two than what you’d like people to believe.

had this happen to me at a conference. I didn’t realize they were going to put where I worked on my nametag, so I spent three days walking around as the guy who worked at “some dumbass company”.

it went over surprisingly well though, and was a great icebreaker that landed me an interview for another job.