- 17 Posts

- 119 Comments

1·3 months ago

Matrix seemed interesting right until I got to self-hosting it. Then getting to know it up close, and seeing what an absolute trainwreck the protocol is, made me love XMPP. At this point Matrix has no excuse for being so messy and fragile. You do you, but I decided it isn't worth my sysadmin time (especially when something like ejabberd is practically fire-and-forget).

1·4 months ago

I don’t think our views are so incompatible; I just think there are two conflicting paradigms feeding a false dichotomy. One, prevalent in the business world, holds that “cost of labour shrinks cost of hardware”, and that it’s acceptable to trade some (= a lot of) efficiency for convenience/saved man-hours. But this is the “self-hosted” community, where people run things on their own hardware, often in their own homes, and pay the high price of inefficiency very directly (electricity costs, less living space, more heat/noise, etc.).

And Docker is absolutely fine and relevant in this space, but only when “done right”, i.e. when containers are not just spun up as isolated black boxes but carefully organized so as to avoid overlapping services and resource wastage, in which case managing containers ends up requiring more effort, not less.

But this is absolutely not what you suggest. What you suggest would waste far more than a “few percent of cpu usage or a little bit of ram”, because you are essentially proposing that every container ship its own web server, application server, database, etc. We are no longer talking about a “few percent” of container-stack overhead; we are talking about the software and compute requirements of “whole new machines”.

So, in short, I don’t think there’s a very large overlap between the business world throwing money at its problems and the self-hosting community, and so the behaviours differ (there’s more than one way to use containers, and in my observation it plays out very differently in each). I’m also not hostile to containers in general, but they cannot in good faith be recommended to self-hosters as a solution that is both efficient and convenient (you must pick one).

1·4 months ago

How does that compare to wallabag?

1·4 months ago

> I don’t care […] because it’s in the container or stack and doesn’t impact anything else running on the system.

This is obviously not how any of this works: down the line, those stacks will very much add up and compete against each other for CPU/memory/IO/…. That’s inherent to the physical nature of the hardware, its architecture and the finiteness of its resources. And here comes the balancing act; it’s simply unavoidable.

You may not notice it because you have thrown plenty of hardware at it, but I wouldn’t exactly call that a winning long-term strategy, especially not in the context of self-hosting, where you directly foot the bill.

Moreover, the server components you are needlessly multiplying (web servers, databases, application runtimes, …) have spent decades optimizing for resource pooling (shared buffers, caching, event scheduling, …). All of that effort is thrown away when each instance serves a single client/container, which further lowers (quite drastically at that) the headroom for optimization and scaling.

1·4 months ago

That’s… one tool in the toolbox for that. But I’m not really sure that’s the point here?

3·4 months ago

I don’t think containers are bad, nor that the performance lost in abstractions is really significant. I just think that running multiple services on a physical machine is a delicate balancing act that requires knowing what’s truly going on and carefully sharing resources, sometimes across containers. By the time you’ve reached that point (and know what every container does and how its services are set up), you’ve defeated the main reason many people use containers in the first place (mostly to fire and forget black boxes that just work), and only added layers of tooling and complexity between yourself and what’s going on.

With only one having your interests at heart. An easy choice.

11·4 months ago

You mean, after doing nothing when the previous round of Chinese dumping sank the German photovoltaic industry? Our energy transition is indeed much better off with a monopoly and our capital entrusted to a power on the other side of the world…

1·4 months ago

> The UI of Prusa slicer is hot garbage though.

I’ll give Orca/Bambu the edge for “prettier on screenshots”, but in practice I don’t find their UI paradigm any more efficient or convenient.

4·7 months ago

How about Nextcloud with only the bare minimum of plugins? Files alone is pretty snappy.

1·7 months ago

Pydio used to be called AjaXplorer and was a pretty solid and lightweight (although featureful) solution, but then they rewrote the UI with lots of misguided choices (touch controls and Android-inspired interactions on desktop devices), and it became so horrendous, heavy and clunky that I almost forgot about it. I wonder whether they have reversed the trend (though from the screenshots it doesn’t look like it).

2·7 months ago

Aren’t they completely different things?

4·8 months ago

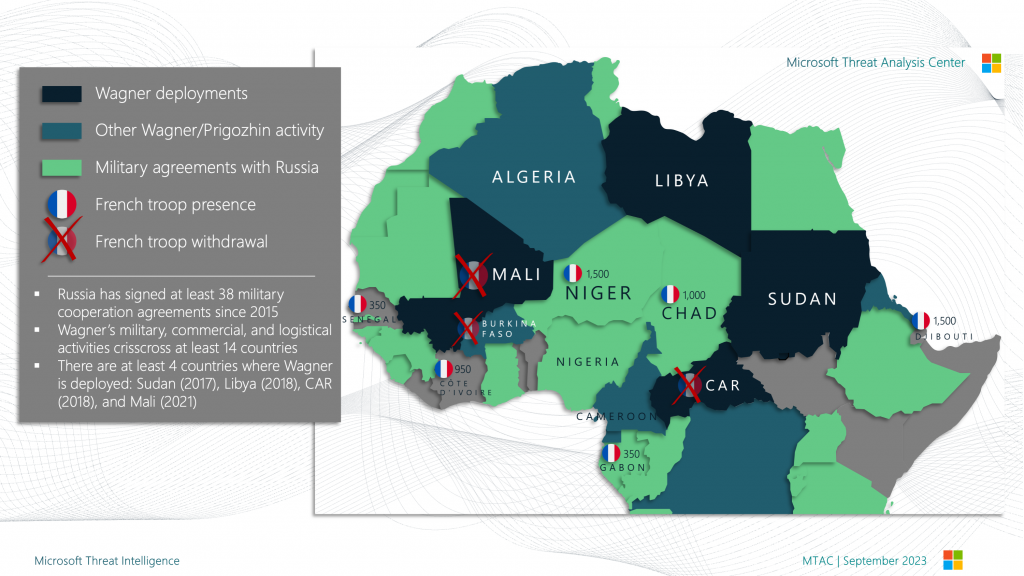

> Russia supplied 77 per cent of China’s purchases

Not exactly a surprise, then. And good luck to the Russian arms industry bouncing back, considering its performance on the battlefield and its entanglement with Western tech, from which it hasn’t managed to decouple since 2014. China is only taking a reasonable stance there.

2·8 months ago

And how much of this troubled history is linked to Java applets/native browser extensions, and how much of it is still relevant today?

52·8 months ago

Yep, but:

- it’s one runtime, so patching a CVE patches it for all programs (vs patching each and every program individually)
- GraalVM is taking care of enabling Java to run on Java

61·8 months ago

Or rather a Dunning-Kruger issue: seniors who have spent significant time architecting and debugging complex applications tend to be big proponents of things like Rust.

135·8 months ago

Why? What’s wrong with safe, managed and fast languages?

20·8 months ago

I agree with the sentiment and everything, but the whole gaming console industry went to crap once they started putting hard drives/storage in them, with the goal of requiring you to be online and no longer own anything. They are all equally despicable for that. Which makes emulation even more essential, if only to preserve those games for the future, when the online storefronts inevitably shut down.

41·9 months ago

I’m with you. Hg-git is still, to this day, the best Git UI I know…

I’m not a cryptographer, so I can’t really pass judgement on the poster’s abilities or reputation, but what’s certain is that this piece reads more like a bingo card of the author’s favourite “crypto stuff” and how it partially overlaps with some characteristics of OMEMO than like a thorough, substantiated cryptanalysis of the protocol and its flaws against real-world usage and threats.

Some snarky remarks like

are needlessly opinionated, inflammatory and unhelpful, and say more about the author and their lack of due diligence (in reaching out to people and reading past public discussions) than they build a story of what the problem is, why it matters, and how to remediate it.

Don’t get me wrong, I would love this piece to have been something else, and to reveal actual problems (which incidentally would have been a great boost to the author’s credibility and fame, considering that OMEMO has undergone several audits and assessments in recent history, including by several state agencies of the German and French governments…), but here we are, with one more strongly opinionated piece of whatever on the internet, and no meat in it to make the world a better place.